Project Overview

The Windows team is exploring how AI technology can improve work efficiency and free users' hands for more meaningful tasks.

In the summer of 2024, I interned at Microsoft and worked on an innovative project exploring the potential of AI beyond conversational interfaces. Working with the AI research team, we investigated several approaches to productizing AI with screenshot-based methods and ultimately developed an MVP of a task-operating AI agent.

Adopting new technology often makes it hard to build trust between users and the system. Following Microsoft's Human-AI Interaction principles, I explored ways to reduce the learning curve and foster trust in a system that could take control of users' devices.

As a leader in AI technology, Microsoft continues to push the boundaries of what's possible. After launching Copilot, the company is now striving to transcend the limitations of conversational AI and develop an AI agent designed to truly assist users in completing tasks.

This was an engineer-led project aimed at exploring the possibilities of screenshot-based AI technology. As the only product designer involved, my role was to explore usage scenarios, understand users' expectations, design an exploratory MVP prototype, and design and conduct evaluative research.

Thus, the design task I was given was to create an intuitive AgentOS MVP experience that lets users complete tasks automatically.

I received this requirement from the engineers:

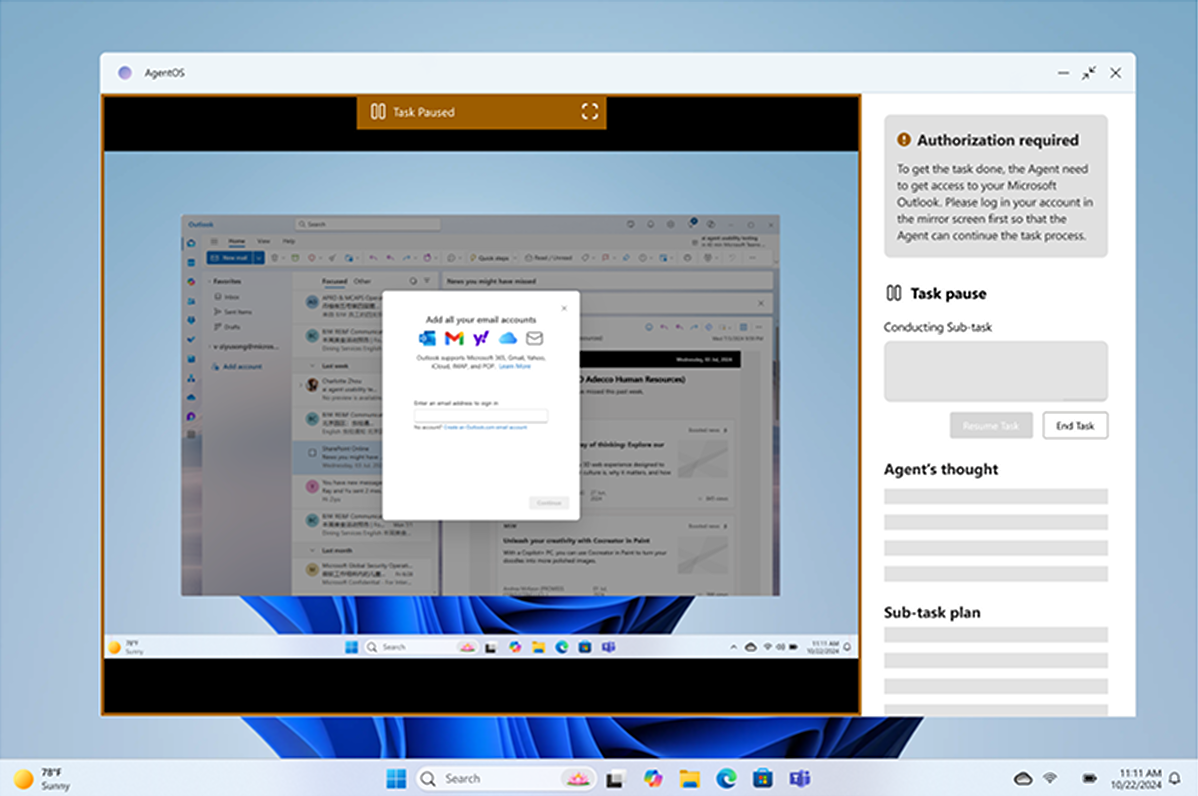

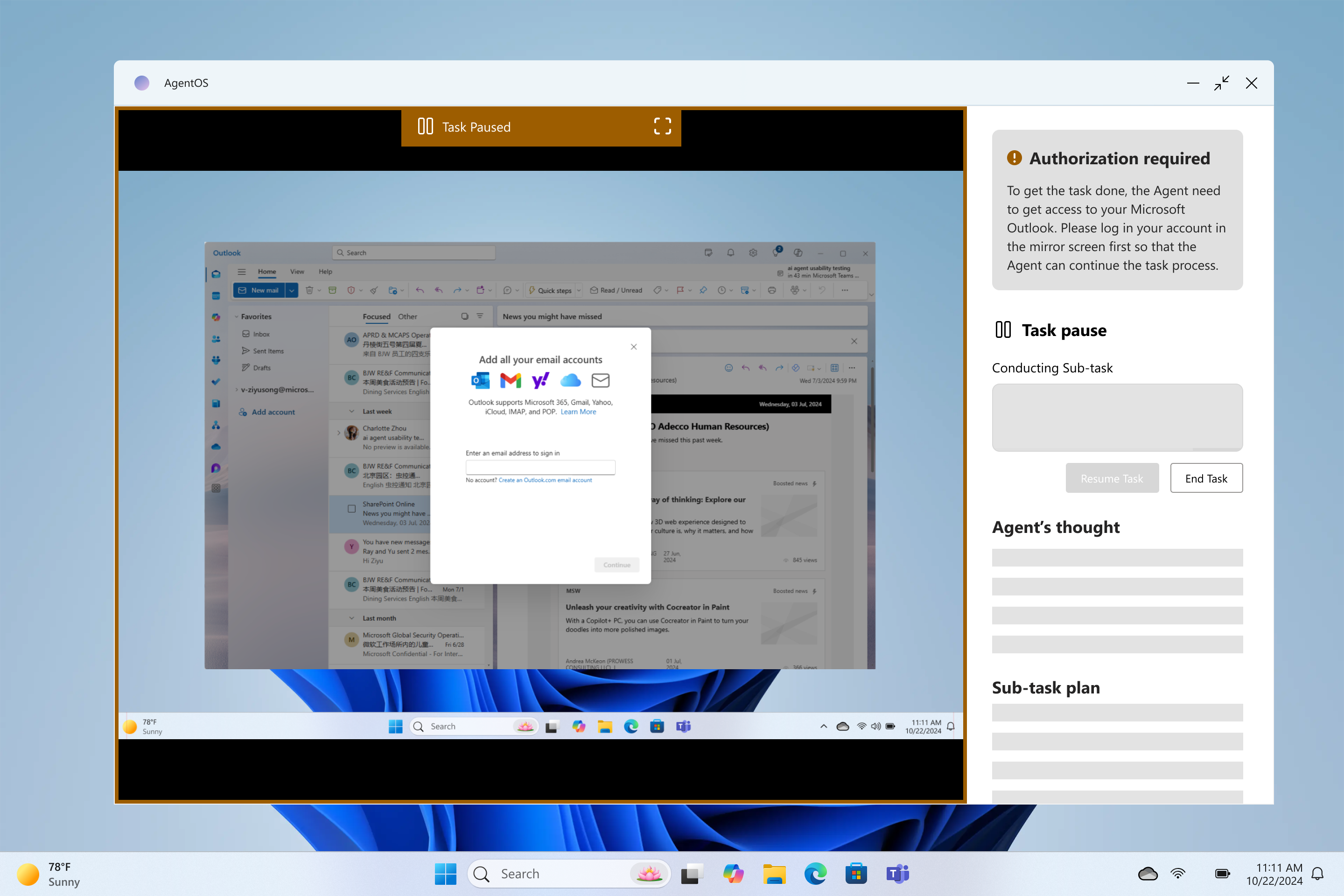

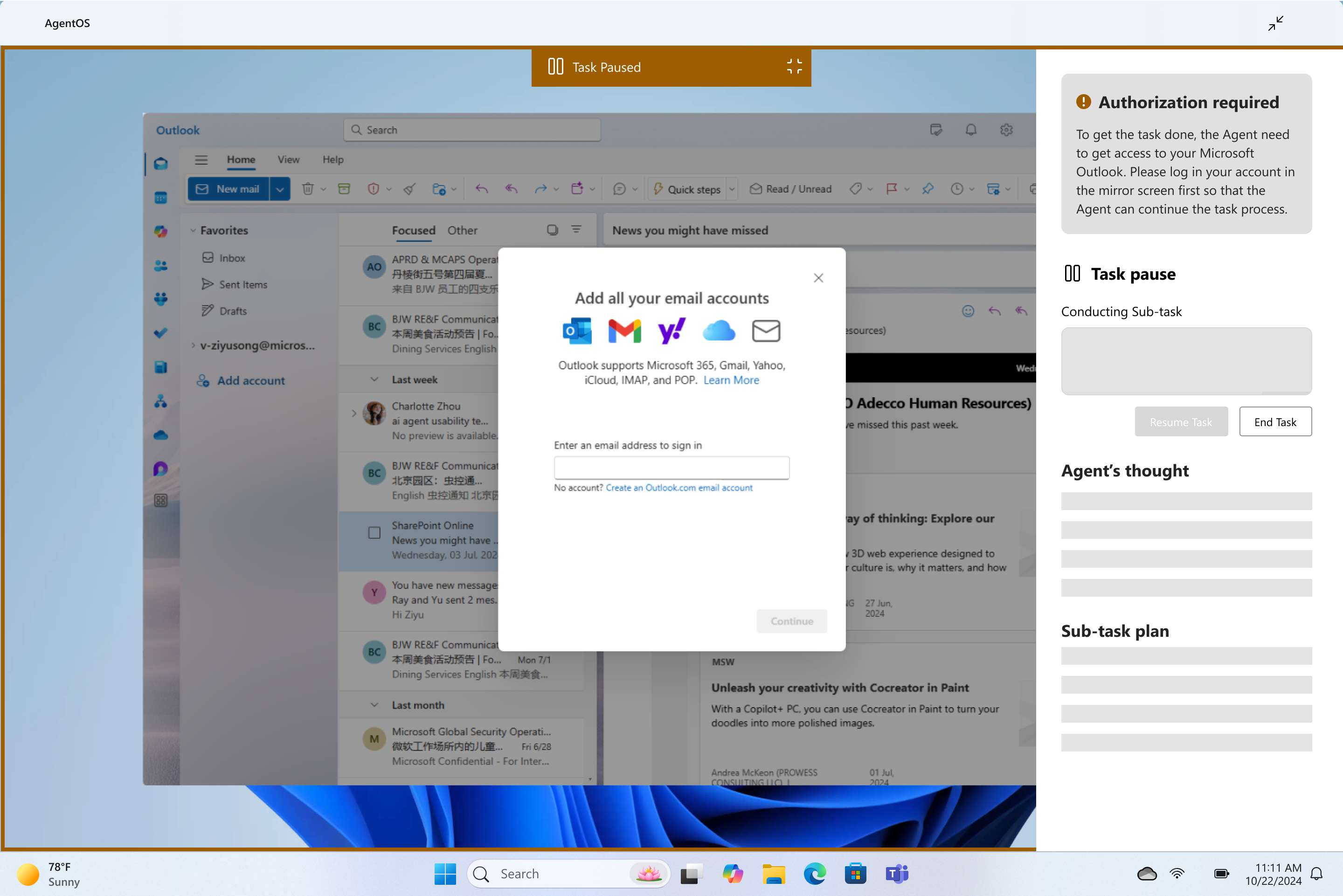

There are three ways MML can be applied to task execution in this product. I listed the pros and cons of each from the user's perspective and discussed with the PM and engineers which approach was feasible. Due to the limitations of the Windows operating system and the outdated APIs of existing Windows applications, we ultimately adopted the picture-in-picture (PiP) approach, cloning the user's interface so that the AI agent could complete tasks based on screenshots.

Screenshot-Based Methods

Taking Control of the Cursor

Pros

1. Commonly used: The agent can perform most tasks by directly controlling the cursor on the desktop.

2. Transparency: Users can easily track how the task is being performed.

Cons

1. Unproductivity: The desktop is occupied by the agent's operation.

2. Fragility: Easily disrupted by the user's mouse movements.

Operating within Picture-in-Picture

Pros

1. Concurrency: The agent can perform tasks while users continue to operate the device.

2. Transparency: Users can monitor the agent's operation in the visualized task process to prevent damage or errors.

Cons

1. High resource demand: Requires significant compute resources to process visual data and translate it into actionable insights.

2. Fragility: Can be disrupted by image noise.

Textual Parsing Methods

Running Tasks in the Background via API

Pros

1. Minimal distraction: The agent can complete tasks with minimal distraction to users.

2. Efficiency: The operation process is faster.

Cons

1. Lack of transparency: The operation process cannot be visualized, making it hard for users to identify potential damage, errors, or poor operation quality.

2. Error-prone: May lose critical context when simplifying complex DOM structures.

This led me to ask the following question:

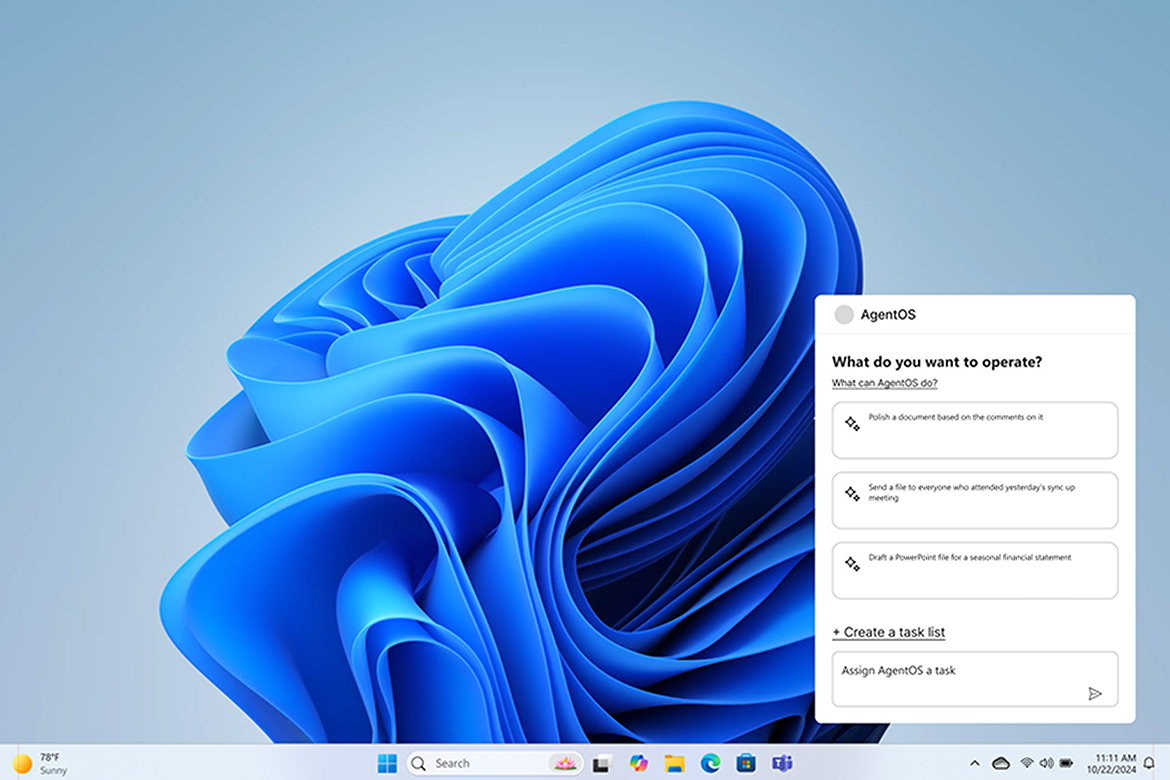

Based on the user flow, I explored several possible main page layouts with mid-fi prototypes, starting with the design of the input window:

❌ Looks like a conversational AI chatbot, which might confuse users

✅ The interface consists of two primary sections: task suggestions and an input field, ensuring clarity for users

✅ Task list creation as an advanced feature

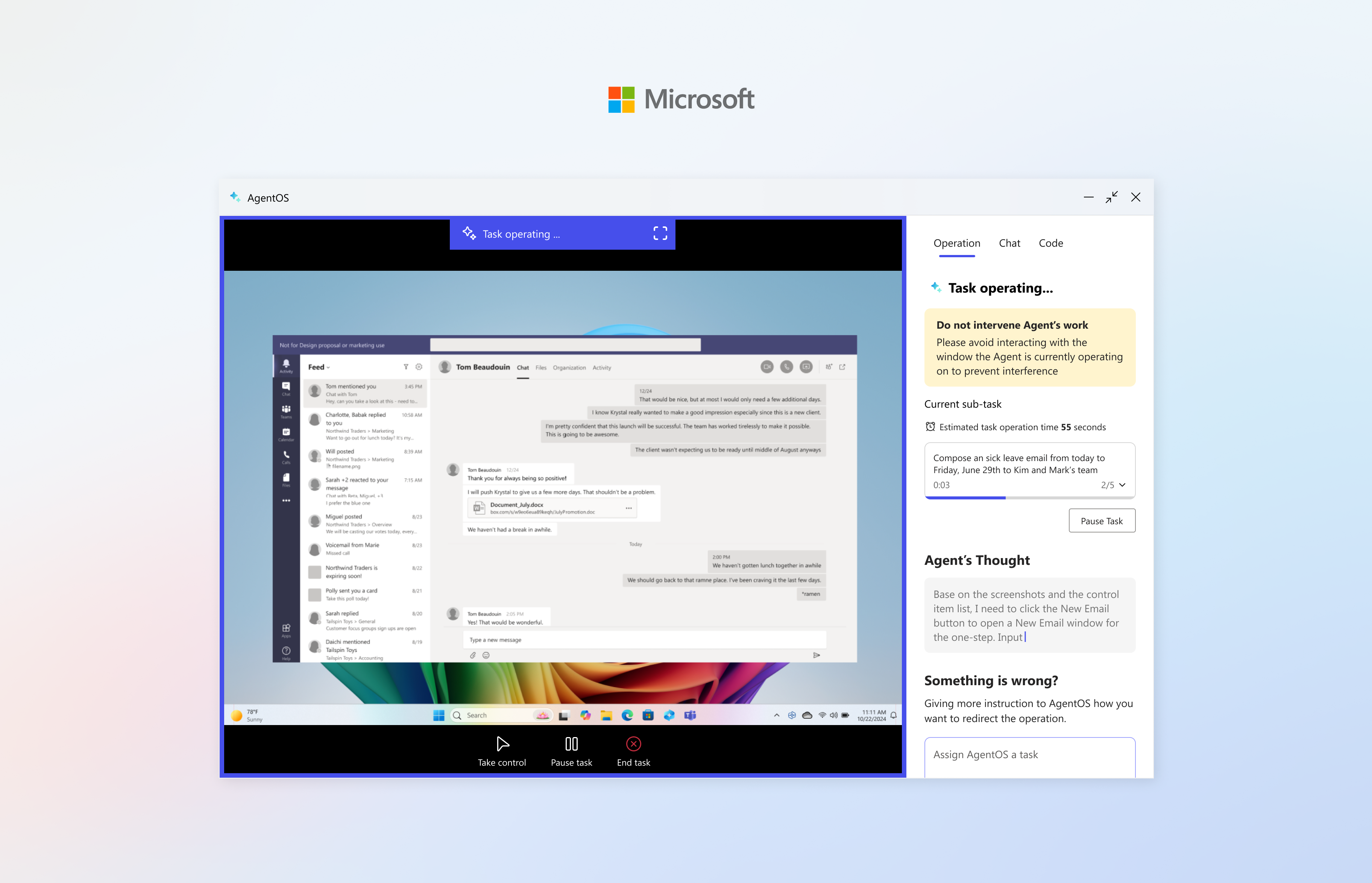

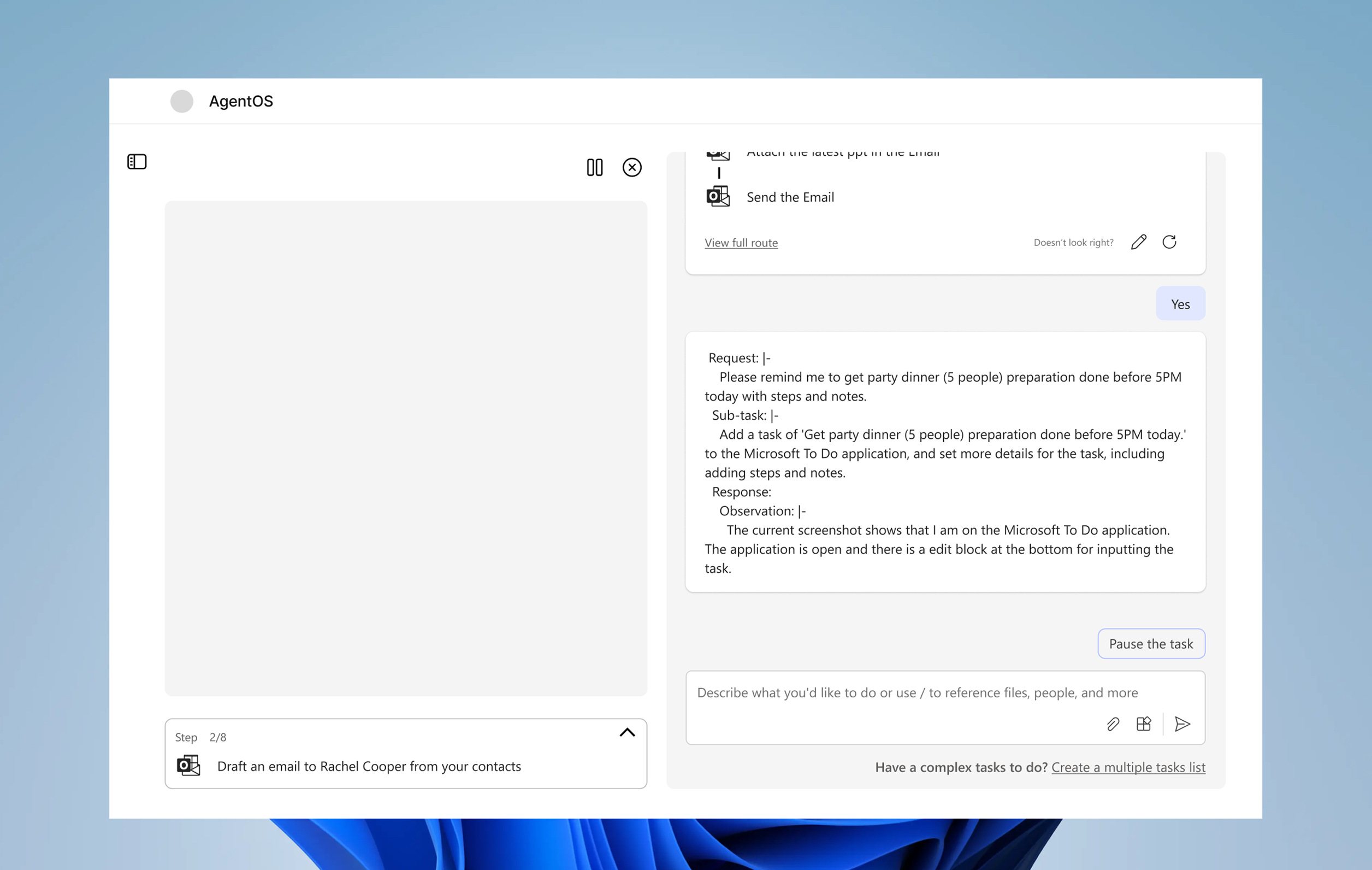

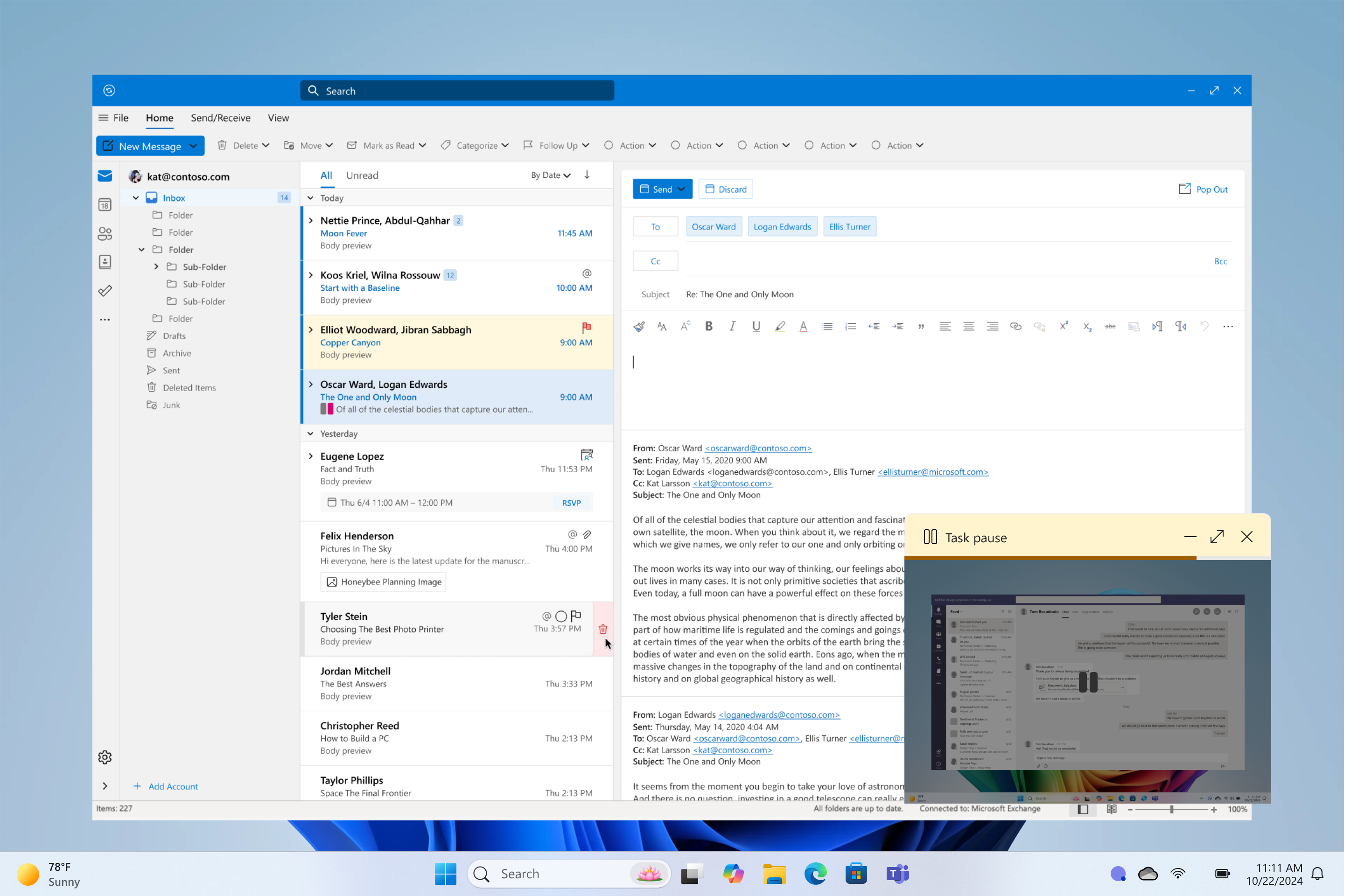

For the task monitor window, I tried several options for window size and layout, eventually arriving at this interface layout:

✅ Intuitive chat interaction with the operational AI

❌ Displaying all of the agent's background running data may overwhelm users

✅ Only display essential data to help users understand operation process

✅ Easy to monitor task operation in the mirrored desktop

Finally, I developed the monitor window in three sizes, each displaying a different level of operation detail:

Size S: Small monitor screen floating on the desktop

Size M: Monitor screen with side panel

Size L: Full-screen task monitor

This project was developed in an engineer-led environment, where technology takes precedence, and designers collaborate with cross-functional teams to explore its potential. Unlike traditional design processes, where problems are clearly defined before solutions are proposed, technical solutions often emerge first in this setting. To thrive in such an environment, designers must work closely with engineers, proactively understanding technological constraints and capabilities while adapting their design approach to align with evolving technical solutions.

Designing for human-AI collaboration requires a nuanced approach that balances user needs with AI capabilities. While traditional UX methods remain valuable, they must be adapted to accommodate AI’s unpredictability, evolving behavior, and decision-making processes. First, designers must develop a deep understanding of both user needs and AI capabilities to create meaningful interactions. Additionally, incorporating AI into the prototyping and iteration process allows for continuous improvement, ensuring a seamless and adaptive user experience.